I normally agree with much of what Think Progress writes, but not in one of its latest pieces on how Senate Democrats stand on Gorsuch appointment. It’s interpretation of their stand is obvious from subheadings of “Team Spine”, “Team ¯\_(ツ)_/¯”, and “Team Trump”.

I am not happy with a Gorsuch appointment because of his strong beliefs against the Chevron Defense. This legal standard has allowed federal agencies to survive challenges to laws typically related to the environment and endangered species. He’s also a conservative judge when we had a chance to appoint a moderate who would actually make decisions based on the law, not ideology.

At the same time, Gorsuch is a much better option than some of the judges Trump was considering. And these latter judges may be up for consideration in the next few years if another Justice retires or dies. Gorsuch has demonstrated a willingness to buck conservatives in decisions if he interprets the law in a way that’s independent of their reasoning.

If I were a Democratic Senator, I wouldn’t vote for Gorsuch, but I wouldn’t filibuster his appointment, either.

By not voting for Gorsuch, I’m signaling that I believe the seat he’ll feel is a stolen seat. I’d want him to know that this “stolen seat” legacy will follow him throughout his years, and his decisions. I’d want him to think about this legacy, especially in light of his professed love of the law. I’d want him to remember that Merrick Garland is highly qualified, more experienced, and was lawfully appointed by the President of the United States.

However, by not filibustering him, I am acknowledging that he is a better option than what we could face from Trump. It’s a long two years until the Senate race when we can hopefully take back the Senate. The Senate Republicans will destroy the SCOTUS filibuster rule if Democrats filibuster Gorsuch. Guaranteed.

Gorsuch will still be judge, but we’ll have lost the ability to force in-depth, stringent confirmation hearings for future appointees; hearings that may cause some Senate Republicans to question whether what’s being said in these hearings is worth the possible hit in their upcoming re-election bid. Because there’s a whole lot of Republicans coming up for re-election along with the Trump administration in 2020.

With the filibuster in place, Senate leaders are going to be more willing to allow for lengthy, robust questioning during the committee hearings, in hopes of appeasing those who might be thinking of filibustering the appointment. Without the threat of filibuster, why should they waste time when they know the control the votes to just vote the person in?

A longer confirmation process may expose information that not only is game changing for Democrats but could be game changing for some Republicans. Republicans who might actually join the Democrats…especially if they’re coming up for re-election.

That’s my take. Others, including many Democratic Senators, have different takes. Regardless of how each Senator handles the Gorsuch appointment, one thing I’m adamant about: I’m not turning this into a purity test for Democrats.

Purity tests, inspired by Sanders and sledgehammered by Jill Stein, helped us lose the election and put Trump into the Presidency. Trump’s Presidency has already hurt people. Trump’s Presidency has already killed people.

That Garland isn’t a Supreme Court Judge is the Republicans’ fault. That Gorsuch and not Garland is the current choice is Trump’s fault. Trump, his cabinet, and the Republican-controlled Congress are, and will remain, my only targets. My entire focus is to limit the damage this Congress and President can do, and to kick them all out in the next three federal elections. To me, nothing else matters.

Too much is at stake to play purity games.

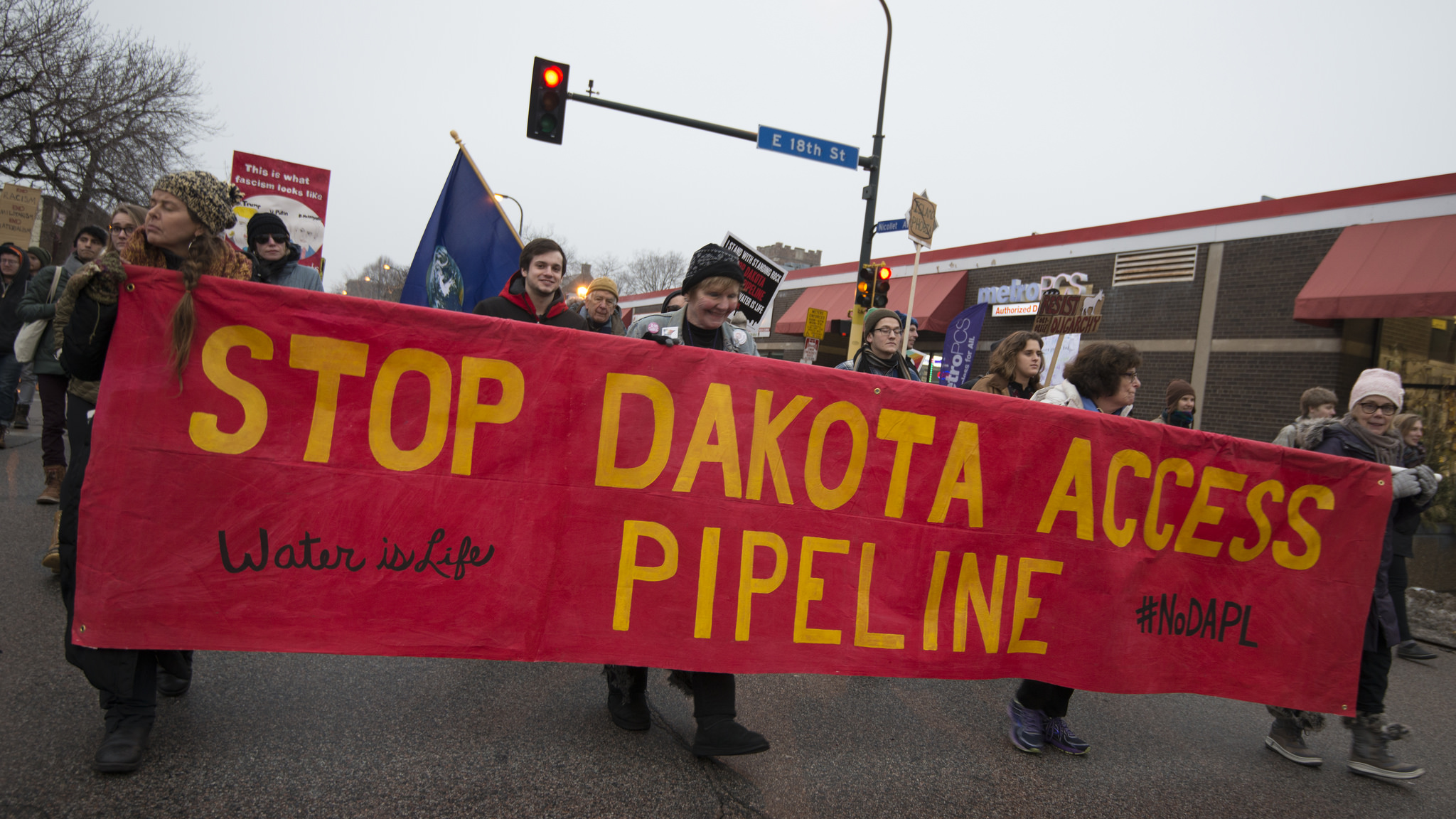

Photo courtesy of Elvert Barnes CC BY-SA 2.0