Silent Sunday March 10 2024

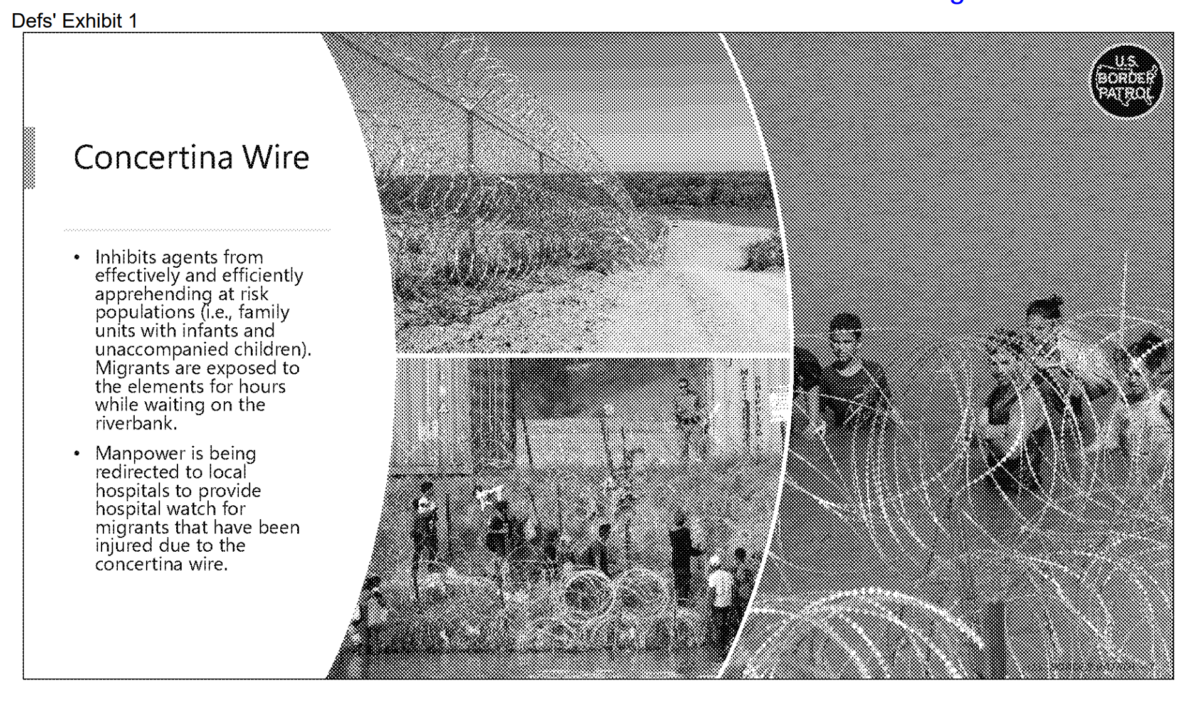

Today the lawyers from the US and Texas are meeting in a courtroom with Judge David Ezra over the fate of Texas’ SB4. This law that Abbott signed into existence basically turns over federal immigration powers to the state, a blatantly unconstitutional act that should end abruptly if our courts follow the law.

I particularly like the US reply to Texas’ continued assertions that it can protect itself from an ‘invasion’. Texas repeatedly brings up Madison in support of its claim. In the US filing, lawyers quote Madison from the 1805 second edition of *Debates & Other Proceedings of the Convention of Virginia, arguing that to Madison, an invasion was a hostile act by a sovereign entity.

Texas invokes James Madison’s discussion during the Virginian Ratifying Convention about the use of state militia to stop smugglers. PI Opp’n at 26. But as Texas acknowledges, id., Madison’s discussion was in response to concerns about Congress calling forth militia to execute federal law. See Debates & Other Proceedings of the Convention of Virginia, 292–94 (2d ed. 1805). And when Madison did discuss “invasion” in the context of the Invasion Clause, U.S. Const. art. IV, § 4, he recognized that it must be conducted by sovereigns. See, e.g., Debates of the Convention of Virginia, 302 (2d ed., 1805) (“[the States] are to be protected from invasion from other states, as well as from foreign powers.”); The Federalist No. 43 at 293 (Cooke ed.1961) (“A protection against invasion is due from every society to the parts composing it. The latitude of the expression here used seems to secure each State, not only against foreign hostility, but against ambitious or vindictive enterprises of its more powerful neighbors.”).

The US case is strong, but what if the courts find for Texas?

Reading through the Texas court documents in this and the other Texas border lawsuits, and in particular remembering what Fifth Circuit Judge Ho said about ‘weaponizing’ migrants becoming an invasion, makes me think that if the courts were to find that Texas is right, then Abbott’s act of shipping migrants to cities in Democratic states like Illinois and New York is, in their interpretation, an invasion.

After all, this act is a weaponized flow of migrants, is it not?

If so, then following the legal logic that Abbott and Ho proffered, states like Illinois should be able to declare war on Texas. Not just Illinois, but California, Colorado, New York, and Pennsylvania could also declare war. After all, why should Texas be the only state allowed to unilaterally act during an ‘invasion’?

If this is true, then one could extend Illinois’ declaration of war to include states like Florida, which has also weaponized migrants in an attack on Democratic states. Or even my own state of Georgia, whose governor, Kemp, has decided to send National Guard members to Texas ‘in support’. Why shouldn’t Illinois declare war on Florida and Georgia?

After all, Illinois hasn’t done anything other than scramble to find warm clothing and shelter and food for the migrants. It hasn’t done anything to cause harm to Texas, Florida, and Georgia. Illinois is the innocent state suffering the consequences of hostile actions initiated by other, sovereign, states. I would think war would be the natural outcome of these events.

Fair’s fair.

—

*If you want to go to the source, you can find copies of the Notes online, but be forewarned about the typographical long s, which looks like an ‘f’ and takes some getting used to.