Elon Musk’s Twitter antics are getting harder to ignore, so I’ve joined with others to look at social media competitors in hopes of finding that perfect Twitter alternative.

Hint: there aren’t any.

The Trouble with Tribel

The first app I checked out is Tribel, a social media app created by Omar Rivero, also known as the founder of Occupy Democrats.

I verbalized Tribel as “tribble” right at the start, which made me inclined to like the service. However, friendly associations aside, Tribel is trouble.

The first red flag for the service was when it asked for age and gender when signing up. There’s absolutely no reason for this type of information unless the people behind Tribel plan on doing some data gathering. If you don’t want the kiddies, then just put a disclaimer in at signup requiring that the person be over 18.

Hmmm.

Once you’re reluctantly past the intrusive sign-up, the next roadblock is figuring out how the system works.

Tribel doesn’t seem to have the character-count limits of Twitter, and as someone pointed out, you can edit your posts. But the system also forces you into behaviors that are annoying.

For one, you can’t just do a post and publish it to the world. You have to pick your audience, and then you have to select from a gawd-awful huge list of topics and sub-topics. If you choose to submit a personal post, then you can only share it with friends. If you do pick a topic, then it asks if you want to be a Contributor, when all you really want is to publish a damn post.

You could look beyond these design fails, but how people treat you on the service is something you can’t ignore. Woe unto you who criticizes Tribel, the software.

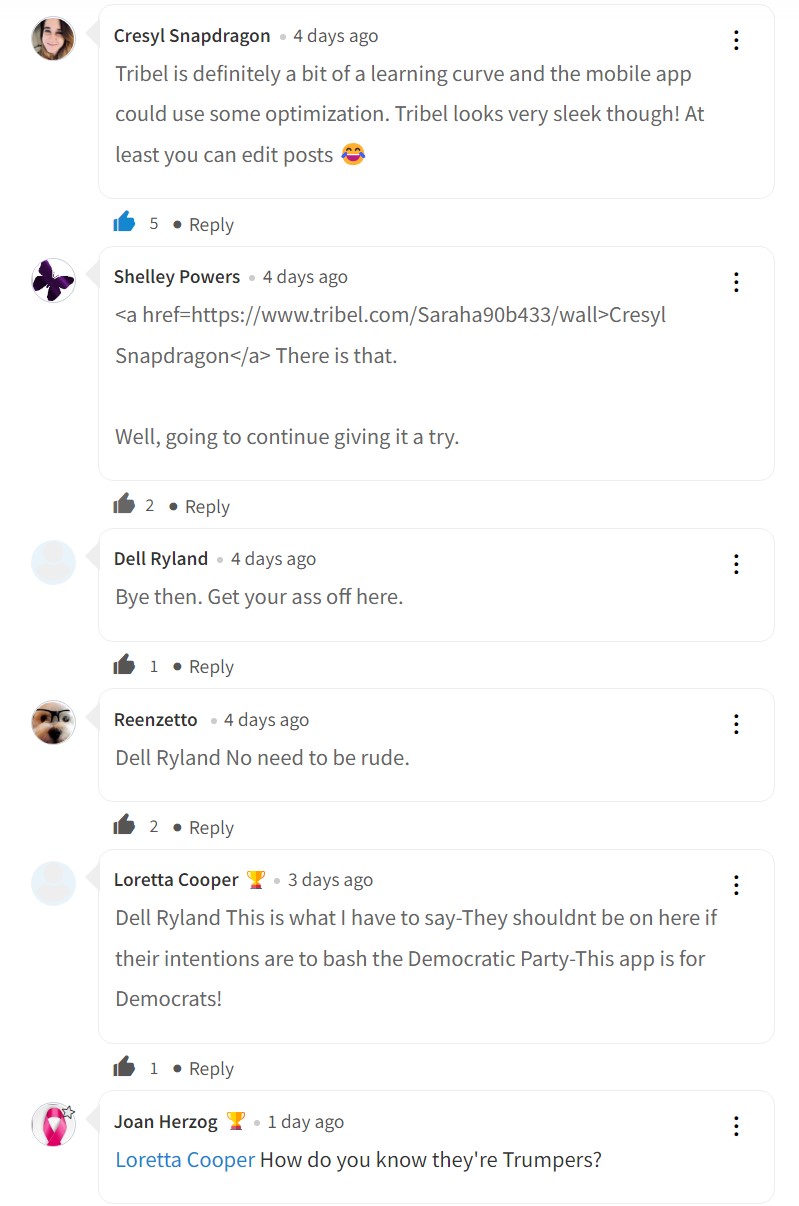

The screenshot shows an exchange I went through when I expressed my unhappiness about some of the Tribel design decisions (specifically, having to choose from a gawd-awful list of topics). I don’t think I’ve ever before been accused of being a traitor to the Democratic cause solely because of tech criticism.

This screenshot demonstrates the biggest problem with Tribel: it is an echo chamber, similar to TruthSocial but falling over on the left. That the members are Democrats, or progressive, or liberal doesn’t matter: it’s an echo chamber; an echo chamber that exhibits zero tolerance for dissent.

I’m a Democrat and a progressive and a liberal…but I’m not a clone or a cult member.

Tribel promises to be a “kinder, smarter network.” It’s anything but.

counter.social and the 90s live again!

The second social media app I tried was counter.social. Unlike Tribel, it’s fairly simple to post…once you get past all the 5xx errors from a service that’s being hit with a lot of new signups at the moment (a problem all the apps are experiencing right now with the sudden interest).

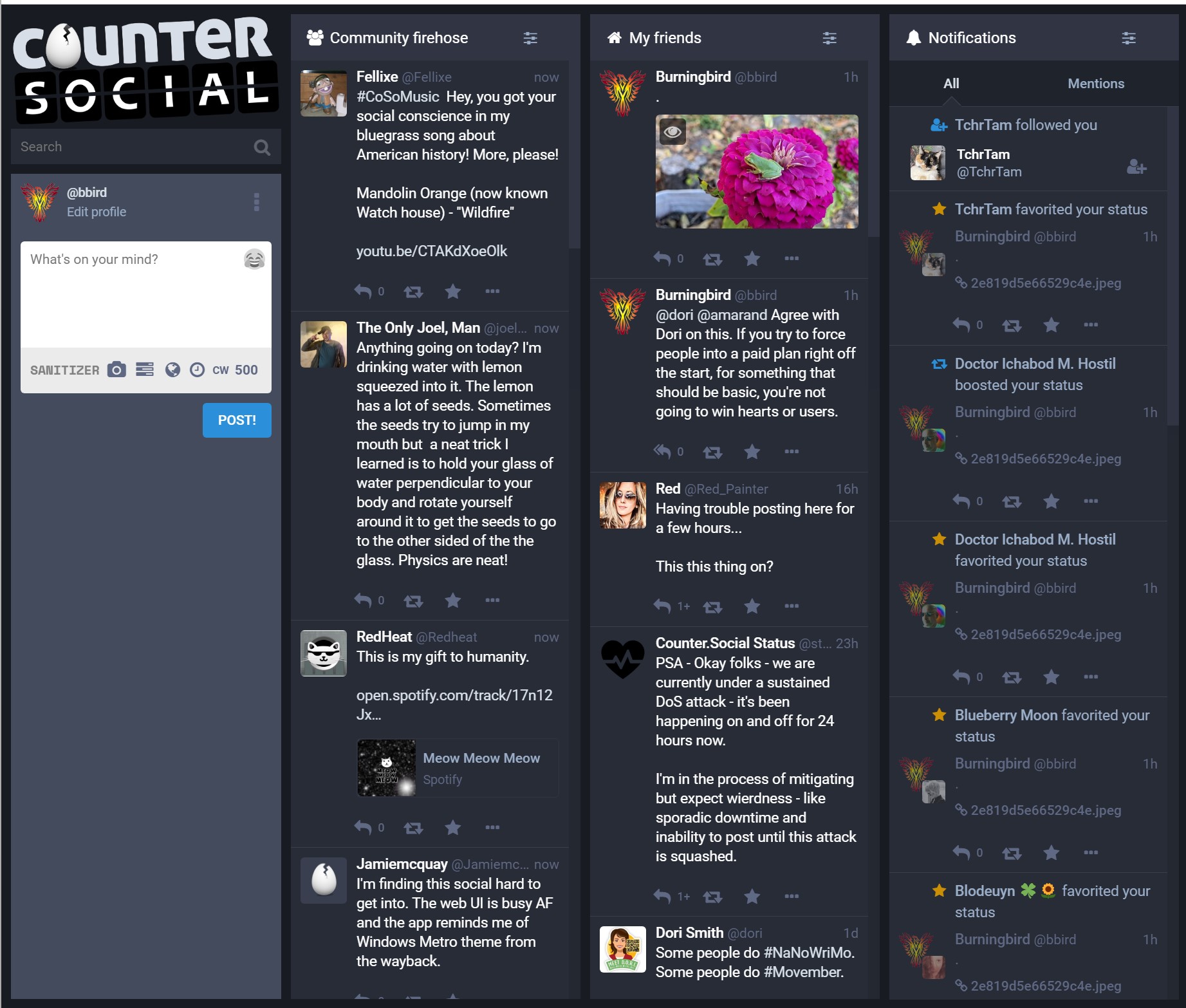

Once you can access the site, your first thoughts might be, “The 90s called, and they want their web design back.”

It actually features a scrolling banner at the bottom. Wow, when was the last time you saw a scrolling banner? The rest of the site is a jumbled mess of columns, all white text on dark background and featuring a lot of ‘stuff’ including that scrolling banner.

Thankfully, counter.social does feature an ostrich mode in preferences that turns off much of the cruft, including the banner. You can access preferences by clicking the three dots next to your profile.

There is no option to change the coloring to dark on light, or to make it less messy. The most you can do is actually make it more messy by adding more columns of stuff to the page.

Additional functionality including creating groups and lists and modifying the appearance is behind a subscription paywall. The amount you have to pay isn’t very much ($4.99 a month), but having to pay for what should be basic functionality isn’t necessarily conducive to increased participation.

I did find the folks on counter.social to be quite friendly. The service is still small enough to have a nicely intimate feel to it. Two things, though, don’t work for me.

The first is the design and layout, which is just too busy and overwhelming. It’s hard to see what’s going on. Even in Ostrich mode, it’s too busy. I suspect even if I could switch to a dark on light background, it would still be too busy.

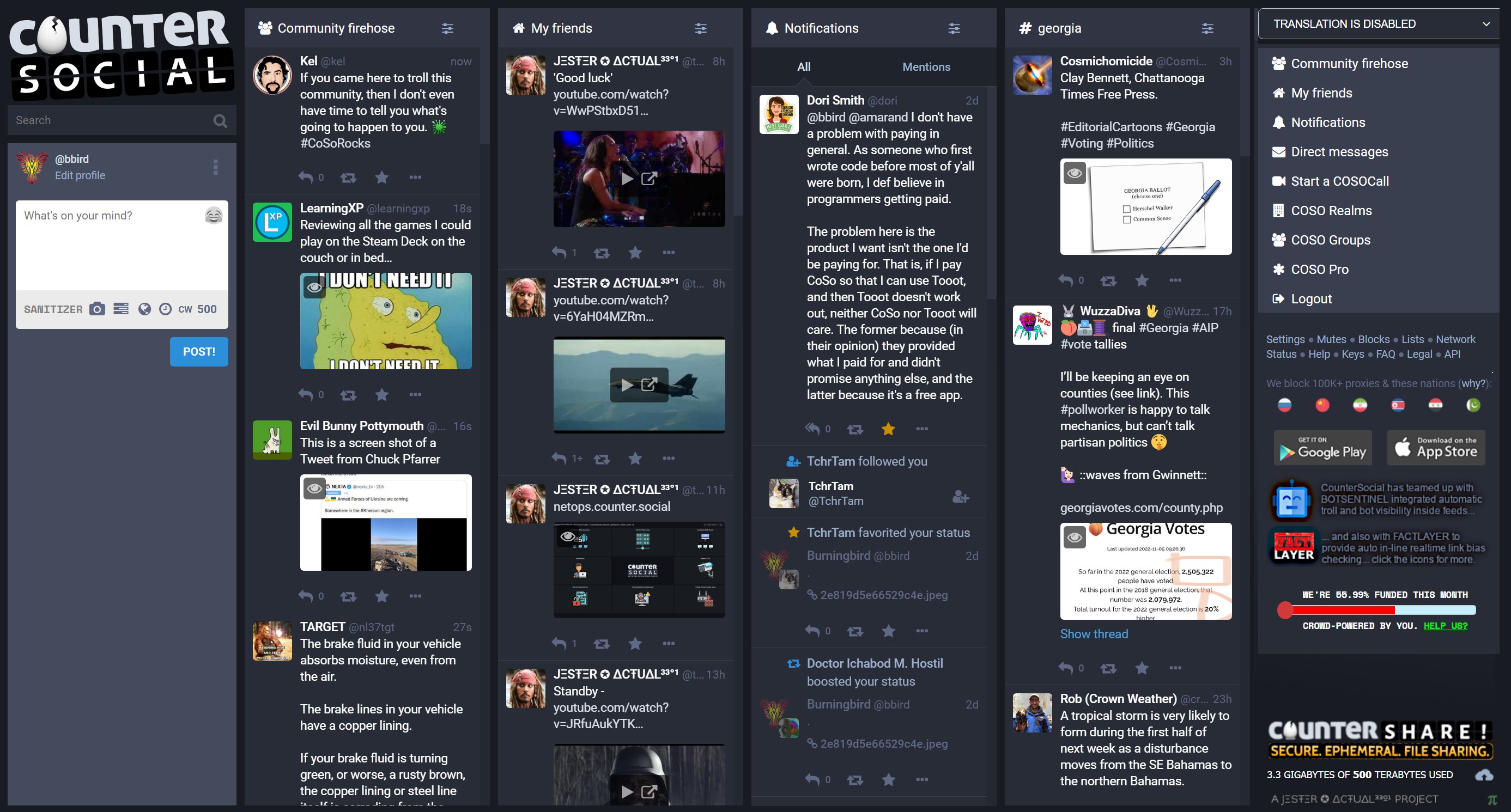

The second concern—and the primary concern—is the fact that the service is controlled by one person.

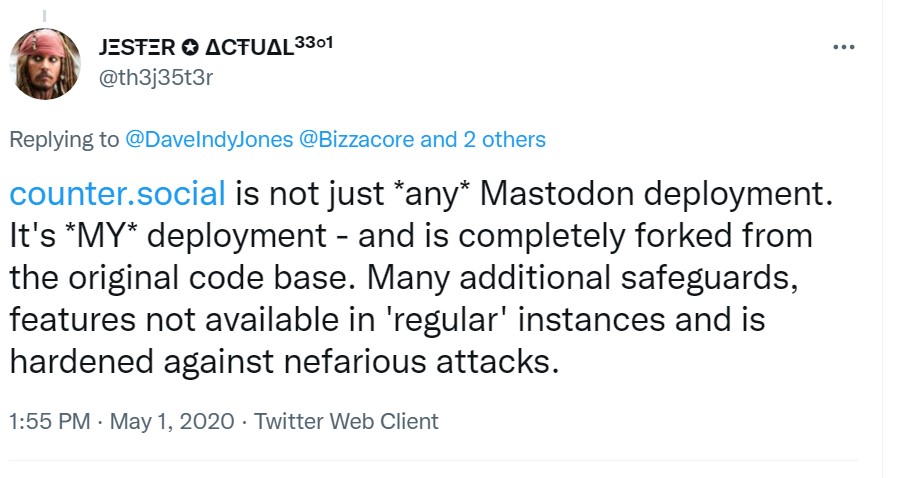

The counter.social app, itself, is a fork of Mastodon (discussed next), by The Jester, a very well-known hacktivist. In real life, The Jester is a man named Jay Bauer.

The counter.social site promises a hate-free environment, and I have no reason to doubt this is true. Moderation takes resources, though, and we have no idea how many resources counter.social has.

The funding for the site is a month-to-month operation. That’s one of the actual design elements: a progress bar tracking whether the month’s funding goal has been met. The site does tend to make its funding fairly quickly during the month, but the nature of the funding and ownership make the service very precarious.

Frankly, I don’t want to trade one service that was purchased by a billionaire for another that could easily disappear or be sold.

I quit counter.social after my first impressions, but then decided to continue giving it a try (I’m @bbird). I might be able to learn to live with the 1990s design, but that single owner is likely to be a no-go for me. This leads me to the next social media app, which goes from one owner to no owner.

I’m on Mastodon. Somewhere.

Mastodon is a fascinating social media application, because unlike Twitter, or Facebook or counter.social, no one owns it. Or, I should say, everyone owns it.

Mastodon is a federation of individual servers, run by different groups or people all over the world and based on open source software and protocols. When you sign up for Mastodon, you don’t sign up at a single entry point: you find a server you’re interested in, and then sign up there.

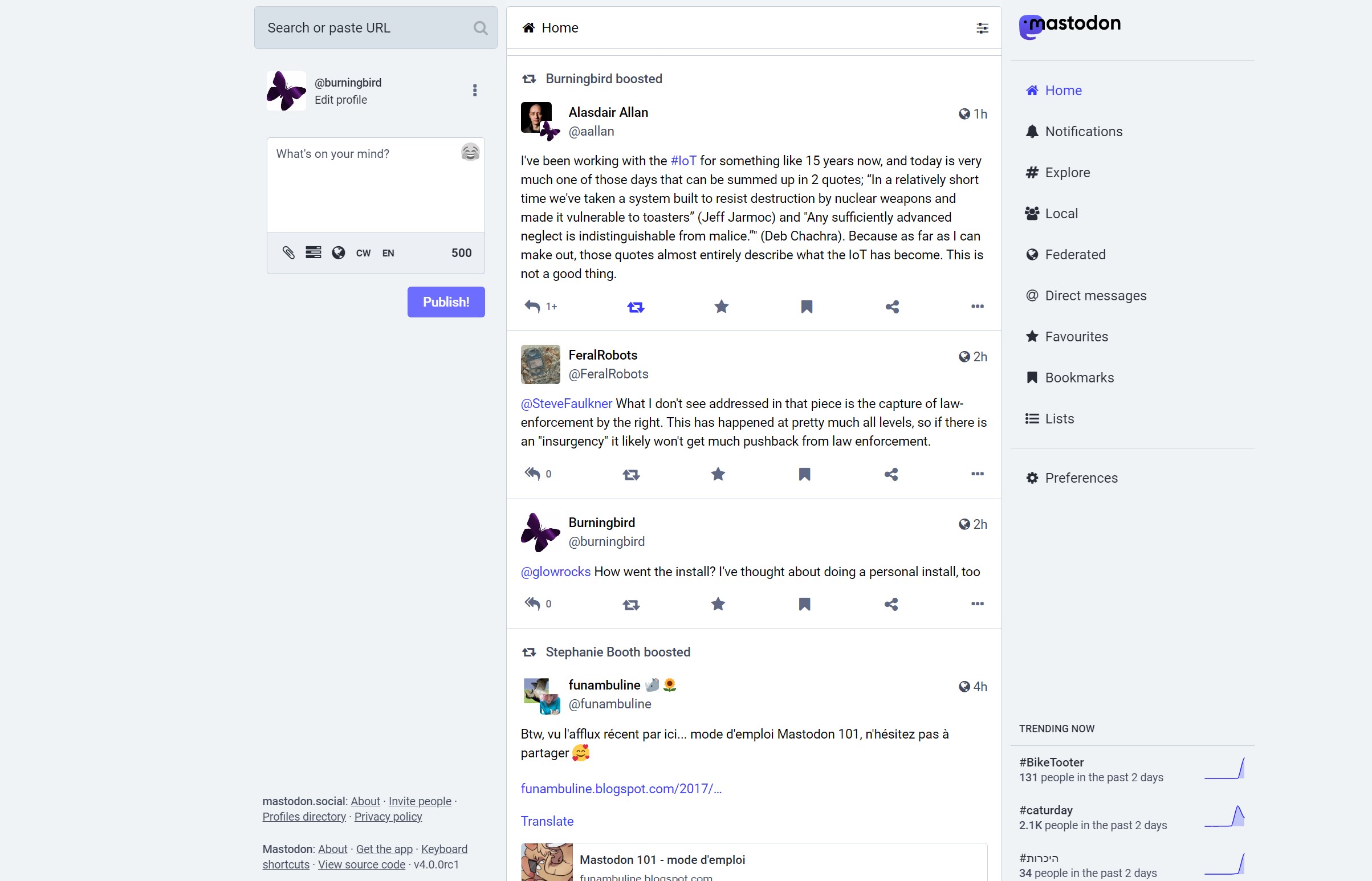

Once signed up, though, people can follow you regardless of what server they’re on and you can follow them back. So, I’m signed up at mastodon.social as @burningbird, but I can follow @someuser at phpc.social, and my posts show up for them, and their posts show up on my home page.
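For the technically curious, here is a rough Python sketch of how a server might turn a handle like @burningbird@mastodon.social into a lookup on the account’s home server. It assumes the WebFinger convention (a `/.well-known/webfinger` endpoint queried with an `acct:` resource), which is how Mastodon servers generally discover remote accounts. The function names `parse_handle` and `webfinger_url` are my own, purely for illustration, and the sketch only builds the URL; it doesn’t make a network call.

```python
from typing import Tuple
from urllib.parse import urlencode


def parse_handle(handle: str) -> Tuple[str, str]:
    """Split '@user@server' (or 'user@server') into (user, server)."""
    user, sep, server = handle.lstrip("@").partition("@")
    if not sep or not user or not server:
        raise ValueError(f"expected user@server, got {handle!r}")
    # Host names are case-insensitive, so normalize the server part.
    return user, server.lower()


def webfinger_url(handle: str) -> str:
    """Build the WebFinger lookup URL for a handle (assumed convention)."""
    user, server = parse_handle(handle)
    query = urlencode({"resource": f"acct:{user}@{server}"})
    return f"https://{server}/.well-known/webfinger?{query}"


if __name__ == "__main__":
    print(webfinger_url("@burningbird@mastodon.social"))
```

The point of the sketch is that the server name is part of the address, much like email: the same username on a different server is a different account.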

Each server may or may not have a waiting list, and each server sets its own moderation rules. In addition, each server may monitor or block other servers that they deem to be the source of spamming, hate, pornography, or misinformation. As an example, you can see a list of filtered, limited, and suspended servers for the Fosstodon Mastodon server, to get an idea of what types of servers do get moderated and blocked.

In addition, you can sign up at multiple servers if you wish. I’m @burningbird at mastodon.social, but I’m also @burningbird at phpc.social, and @burningbird at fosstodon.org. I can keep the separate accounts, or if I decide to stay with just one, I can migrate all my follows/followers to the Mastodon server of my choice. If I do migrate my account from one server to another, we’ll still be connected, and you won’t even know I’ve moved.

Best of all, I can install and set up my own Mastodon server at burningbird.net and join the federation, something I am seriously considering. The only downside to this approach is that I won’t have access to folks on a local server when I run my own. Which is why I may stay with an existing server, and why it’s important to sign on to a server that best matches your interest.

(If I do install Mastodon, it would be for personal use. I’ve run a server for multiple people in the past, and it was exhausting and very stressful.)

Of course, the freedom to sign up at multiple servers is also one of the problems with Mastodon: there’s no way to know who is authentic and who isn’t. I’ve signed up as @burningbird at three different servers. Someone else can sign up as @burningbird at other servers, and you won’t know who is who without some other way of authenticating the individual. In most cases, you’ll have to find the correct Mastodon user by following a link they’ll provide either at a web site, or other social media app.
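One way to automate that “follow the link from their web site” check is to look for a link on the person’s own site that points at the profile and is marked `rel="me"`, which is the convention Mastodon uses for its link verification. The Python sketch below is only an illustration under that assumption: `RelMeCollector` and `claims_profile` are names I made up, the profile URL format (`https://server/@user`) is assumed, and a real check would also need to fetch the page over HTTPS and confirm the profile links back.

```python
from html.parser import HTMLParser


class RelMeCollector(HTMLParser):
    """Collect href values from <a> and <link> tags marked rel="me"."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag not in ("a", "link"):
            return
        attr = dict(attrs)
        # rel can hold several space-separated values, e.g. "me noopener".
        rels = (attr.get("rel") or "").lower().split()
        if "me" in rels and attr.get("href"):
            self.links.append(attr["href"])


def claims_profile(page_html: str, profile_url: str) -> bool:
    """Return True if the page has a rel="me" link to profile_url."""
    collector = RelMeCollector()
    collector.feed(page_html)
    wanted = profile_url.rstrip("/")
    return wanted in {href.rstrip("/") for href in collector.links}


if __name__ == "__main__":
    # Hypothetical snippet of a personal site's HTML.
    sample = '<p><a rel="me" href="https://mastodon.social/@burningbird">Mastodon</a></p>'
    print(claims_profile(sample, "https://mastodon.social/@burningbird"))
```

This doesn’t prove identity on its own. It only shows that whoever controls the web site is pointing at that particular account, which is about as much as you can know without a central authority.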

(Note that Musk doesn’t consider authentication to be a big thing, since he’s turned the famous Twitter blue authentication checkmark into a marketing brand anyone can buy. I like what one person wrote on Twitter: the blue checkmark will become the equivalent of posting an Amazon Prime subscriber badge.)

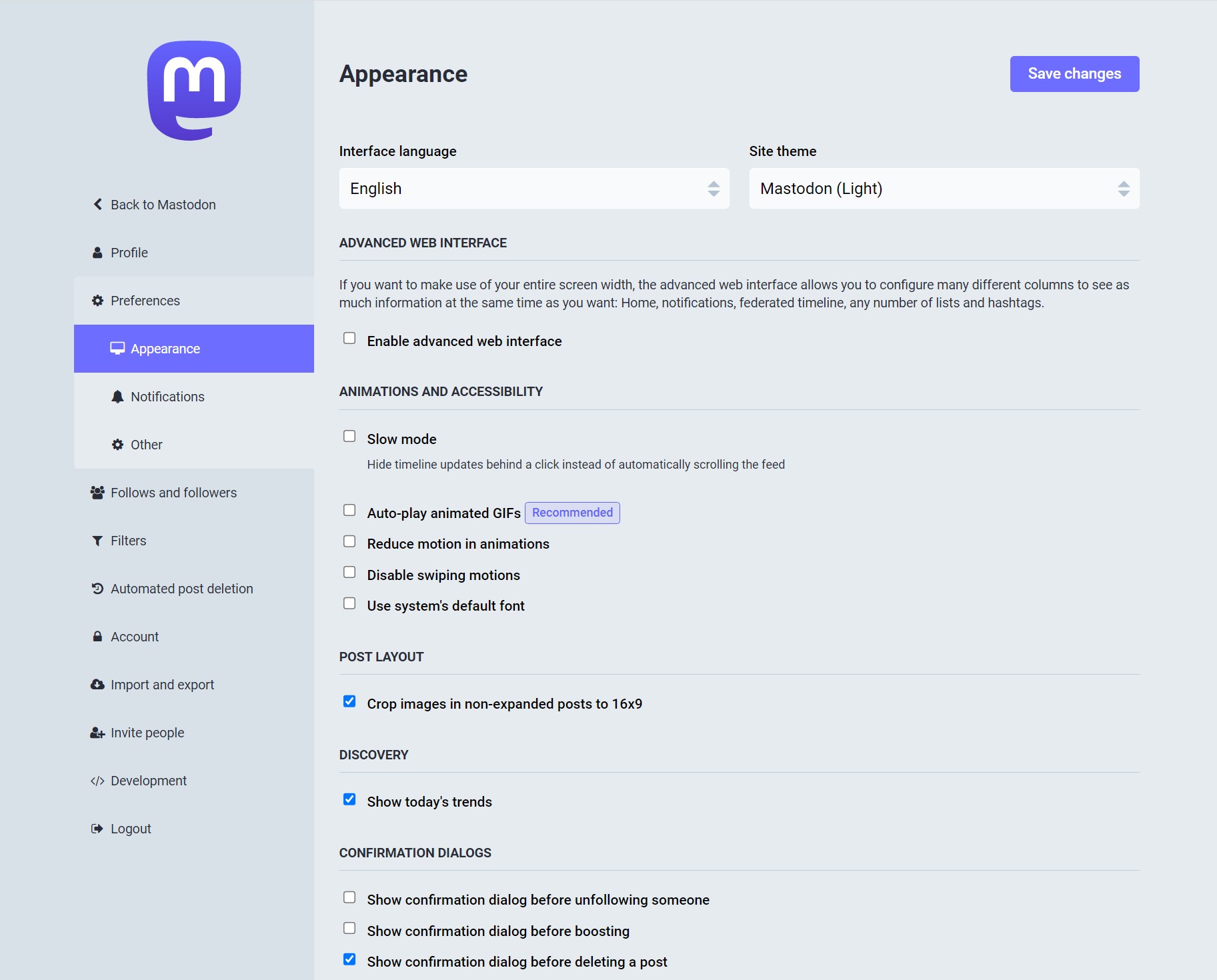

From a usability perspective, Mastodon is about the closest experience I’ve found to Twitter, notwithstanding the expected growth issues related to a sudden surge of new users. You start out with light text on dark background, but you can change to the dark text on light background in Preferences. In addition, you can change to slow mode for your feed (new posts require a click rather than automatically scrolling), set image size, determine what happens when an image is hidden and so on.

Unlike counter.social which tends to get into your face about contributing funds, the Mastodon servers typically include a request for donations in their About pages, and they’re not pushy about it. Having said this, if you do like Mastodon and you like your server and don’t want it to go away, consider contributing.

Mastodon isn’t owned by corporations, the Saudi government, or some rich guy. Because of the open source nature of the software, and the standardized open protocol of the federated access, trying to buy out Mastodon would be like trying to buy out the web or the entire internet. Not even a big bucks guy like Musk could do it.

What about Bluesky?

In the midst of all of this, Twitter co-founder Jack Dorsey has popped up with Bluesky, seemingly his version of a federated social media app.

When I first heard about it, I signed up for the beta. If I get invited, I’ll probably check it out for grins and giggles. But will I stay with it? Unlikely.

To me, the biggest strike against Bluesky is the fact that Dorsey chose to go his own way on designing the federated protocol for Bluesky (the AT protocol) rather than work with the open source and open standards community. This type of arrogant indifference to open standards and its “I know what’s right, and I’m doing it my own way” attitude just stinks. I’ve seen it too much and have fought against it for years. I certainly don’t need to buy into it because one technocrat thinks he knows better than anyone else.

Dave Troy touched on much of this in an in-depth piece that discusses Dorsey, his relationships with Musk, and their world views. What he wrote made me wary even before discovering the AT protocol. Read it, and form your own judgement.

Ultimately, it’s not the application or the technology: it’s the people

After testing the three tools, I’ve decided to stay with Mastodon. I’m still exploring the network, still considering what server I want to live on, but what I’ve seen pleases the open source “can’t be owned by rich assholes” part of me.

However, I’m not quite ready to give up Twitter, and it’s not because I’m enamored of the app. I actually find Mastodon to be a better tech fit for me. No, leaving Twitter means leaving the best part of Twitter, the part that Elon Musk can’t and will never understand:

The people.

I have built relationships with folks out on Twitter. I have a good group of very smart people I follow and interact with. They’re in technology, Constitutional law, food safety, the environment, politics, news, and life. They can write amazing things in a very small space. They can convince, inform, instill wonder, spark outrage, inspire thoughtfulness, and make me laugh.

A platform’s technology is such an unimportant component of social media. Yes, you want to prevent security hacks, and you need to scale your app to fit the demand. Social media applications are complex and take real skill to manage. I’m not disparaging the abilities of the people who maintain a social media app.

But it’s the people that make the social media app, not the other way around.

Elon Musk doesn’t understand this. He never will. And it’s why I’m investing time in other platforms and encouraging others to do the same. Because someday I hope all the wonderful people I connect with on Twitter will be somewhere else, and I can kiss Twitter good-bye.

And in case you decide to pursue a Mastodon account, find me at @burningbird@mastodon.social. Or you can always find me here, at Burningbird.