The writing is what’s important. Everything else is just candy sprinkles.

Category: Technology

Rebooting Weblogging?

I haven’t been out to scripting.com for a long time, and was surprised to find Dave is not using HTTPS. Of course, the page I tried to access (on rebooting weblogging) triggered a warning in my browser. It triggers a warning in any browser, though some browsers' warnings are more severe than others. There were issues with […]

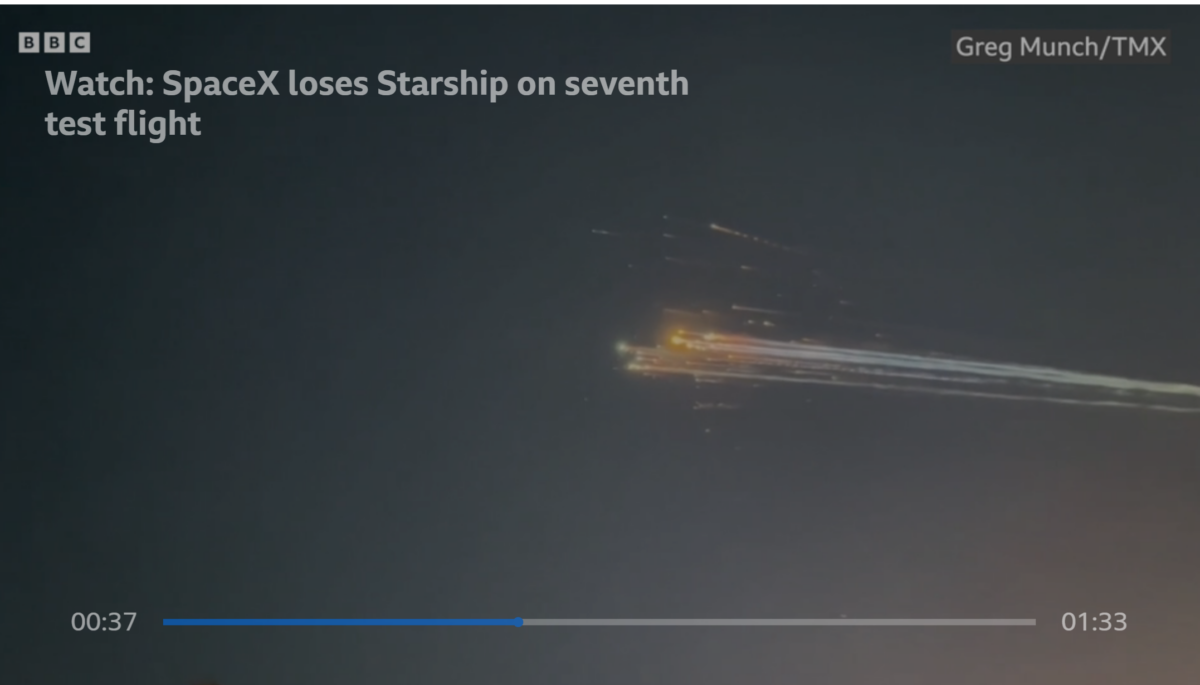

Musk, DOGE, and the phantom promise of AI

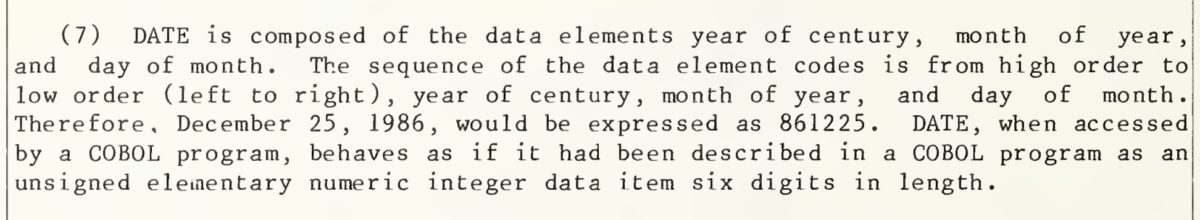

No, the Social Security ‘system’ did not default to a date of May 01, 1875 when a date is missing.

I just installed the ActivityPub plugin for WordPress. With it, you can follow posts here at Burningbird by following bosslady@burningbird.net. Supposedly, then, this post would show up on Mastodon as a new post. There’s also a plugin that would allow my WordPress weblog to subscribe to one or more folks on Mastodon, and publish their […]