When Trump and Musk aren’t villainizing federal workers, they’re making unsubstantiated claims of waste, fraud, and abuse, which, taking a little license with the word order, I’ll refer to as WAF from now on.

I say unsubstantiated because not once has any cabinet member, Congressional rep, DOGE, Musk, or Trump provided an actual WAFfy example. They indulge in a great deal of speculation, innuendo, and accusation. They have held up real numbers as WAF that eventually get fact-checked as legitimate expenses. But no real WAFfy examples.

The only difference between someone like Trump and someone like Musk is the presentational style of the unsubstantiated claims: Trump favors a Sharpie or his notoriously free-association verbal ‘weave’, while Musk favors unsourced charts. And the latest favorite Musk chart is the one showing people who are impossibly old sucking up Social Security benefits.

Who are all these 150-year-olds claiming Social Security! WAF!

However, it didn’t take long before another explanation for the seemingly 150-year-old Social Security recipients popped up. Since the DOGE script kiddies are so fond of AI, let’s use AI to explain:

What happens when a date is missing in a COBOL program

iAsk answered:

According to www.iAsk.ai – Ask AI:

What Happens When a Date is Missing in a COBOL Program

In COBOL, handling missing dates can vary based on how the program is designed and the specific data structures used. Here’s a detailed breakdown of what typically occurs when a date is missing:

1. Data Structure Design:

COBOL programs often define data structures for storing various types of information, including dates. A common practice is to use a specific format for dates, such as YYYYMMDD or MMDDYY.

If a date field is defined but not populated (i.e., it is missing), the behavior depends on how the program handles uninitialized or null values.

2. Default Values:

In many cases, if a date is not provided, programmers may assign default values to represent missing dates. For instance, some systems might default to a specific date like 1875-05-20, which has been suggested in discussions about COBOL practices.

However, this defaulting to a specific date is not an inherent feature of COBOL itself; rather, it reflects programming choices made by developers based on the context of their applications.

3. Error Handling:

Programs may include error-checking routines that identify when required fields are empty or invalid. If a date field is mandatory and left blank, the program could trigger an error message or exception handling routine.

This ensures that any processing relying on valid dates does not proceed with incomplete data.

4. Reporting and Output:

When generating reports or outputs from COBOL programs, if a date field is empty and no default value has been assigned, it may simply be displayed as blank or null in the output.

Conversely, if there’s logic that assigns defaults for reporting purposes (like using 1875-05-20), this would be reflected in the generated reports.

5. Impact on Business Logic:

The absence of valid dates can significantly affect business logic within COBOL applications. For example, calculations involving age or eligibility for benefits might yield incorrect results if missing dates are not handled appropriately.

Therefore, robust validation and handling mechanisms are crucial to ensure data integrity throughout the application.

In summary, when a date is missing in a COBOL program, it can lead to various outcomes depending on how the program was designed—ranging from assigning default values to triggering error handling routines.

Top 3 Authoritative Sources Used in Answering this Question

1. IBM Documentation

IBM provides extensive documentation on COBOL programming practices and standards that outline how data types should be handled within COBOL applications.

2. ANSI/ISO Standards for COBOL

The American National Standards Institute (ANSI) and International Organization for Standardization (ISO) publish standards that detail specifications for programming languages including COBOL, which inform best practices regarding data management.

3. Technical Articles from Retrocomputing Stack Exchange

Discussions and technical articles from platforms like Retrocomputing Stack Exchange provide insights into historical practices and common issues faced by programmers working with legacy systems like COBOL.

Sponsored Content

When it comes to programming, every detail counts, just like in snacking! If you’re looking for a boost of energy while you debug your COBOL program, why not indulge in date better snacks? Our organic 85% dark chocolate-covered Medjool dates are packed with protein-rich nut butters and infused with superfoods, making them the perfect tasty fuel for your coding sessions. Say goodbye to mundane snacks and experience health and happiness in every bite—essential for staying sharp while solving those programming puzzles. Try Date Better Snacks and elevate your snacking game today!

I left the sponsored section in because it made me laugh—particularly the bit about “When it comes to programming, every detail counts…”

Every detail counts. Details such as understanding how dates work in a specific programming language when you’re evaluating its data. Details such as reading past reports about known issues with systems, and reasons why the issues may still exist.

I’m a software engineer. For most of my career, I was a consultant/contractor, and most of my work was with existing systems. The basic rule of thumb when working with an existing system is to understand the context of the system, including the programming language or languages used to create it, the coding practices, the development infrastructure, ALL the documentation, and even its timeline, because all of this can tell you quite a lot about the system.

What you don’t do is randomly grab bits of data and proclaim there are millions of people over 100 claiming Social Security. Not unless you want to look like a complete jackass.

At the same time, you also don’t accept other explanations that have no basis in known fact, and this includes the ‘default year value of 1875’ for a missing date. This is based on nothing more than a vague ISO standard that one person mentioned at one time, and it has nothing to do with COBOL, especially older COBOL systems.

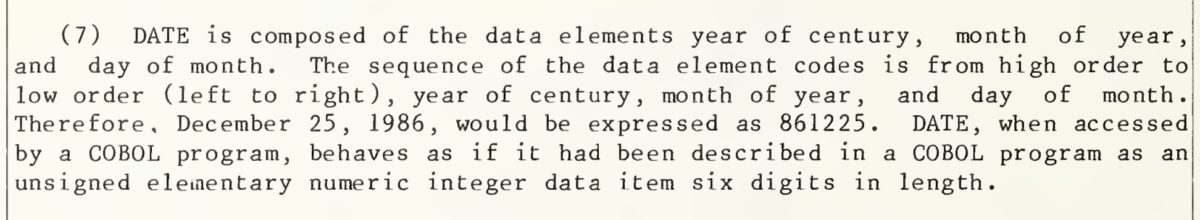

After some exploration in programming languages yesterday, I stumbled onto a PDF of the government’s COBOL coding standards from 1985. I included an excerpt from the date section as the header of this piece.

There is no date type in COBOL, and a character string is used instead. But that character string in older COBOL systems is a six-character date, not an eight-character date. The date would be given as YYMMDD, excluding the century.

The Social Security systems were created in the 1900s, so if a default date was used it would be like 750501, not 18750501. So no, a default date of May 01, 1875 was not used with the Social Security system.
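To make the six-character point concrete, here is a minimal Python sketch. It is not COBOL and it is not taken from any SSA code; the function name `parse_yymmdd` and its `century` parameter are hypothetical, chosen only for illustration. It shows that a YYMMDD field stores no century at all, so an "1875" can only appear if whoever reads the field decides to supply it.

```python
from datetime import date
from typing import Optional


def parse_yymmdd(field: str, century: int = 1900) -> Optional[date]:
    """Parse a six-character YYMMDD field (hypothetical helper).

    The field itself carries no century, so the caller has to supply one.
    Returns None for blank, malformed, or impossible dates.
    """
    if len(field) != 6 or not (field.isascii() and field.isdigit()):
        return None
    yy, mm, dd = int(field[0:2]), int(field[2:4]), int(field[4:6])
    try:
        return date(century + yy, mm, dd)
    except ValueError:  # for example month 13 or day 32
        return None


if __name__ == "__main__":
    # Read with the assumed default century of 1900.
    print(parse_yymmdd("750501"))
    # The same six characters, read with a different assumed century.
    print(parse_yymmdd("750501", century=1800))
    # A blank field is simply a missing date, not a date in 1875.
    print(parse_yymmdd("      "))
```

The design choice worth noticing is that the century is an argument, not data. Nothing in the stored record distinguishes 1875 from 1975.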

However, clearing up misinformation about a default date does not exonerate Musk and the other members of DOGE. A simple review of past reports on the Social Security system provides all the explanation we need about the supposed millions of people over 100 and whether there are massive amounts of fraudulent payments to dead people.

In July 2023, the Social Security Administration (SSA) Office of the Inspector General (OIG) reported on an audit of what is known as the Numident, a file (or likely, a database) of every person issued a Social Security number. When Social Security receives information about an SSN holder’s death, it updates another file (database) known as the Death Master File (DMF). It is the Death Master File that is then shared with federal benefit-paying agencies.

What the OIG audit noted in 2023, and previously noted in 2015, is that there are several million records in the Numident file that do not have a date of death. The OIG recommendation was that SSA use various other databases and incidental sources of data to fill in the missing dates of death in the Numident file, to ensure there are no improper payments or, more importantly, no use of dead people’s Social Security numbers for fraudulent purposes.

The SSA disagreed. As they noted in the audit response:

We collect death data to ensure the records on which we base eligibility and payment decisions are as accurate and consistent as possible for current and future beneficiaries. When we receive a report of death, we take timely action to terminate payments. The records identified by OIG involve non-beneficiaries and do not involve improper payments. Correcting records for nonbeneficiaries would divert resources from work necessary to administer and manage our programs, which we cannot afford.

It’s important to understand the two primary assertions of fact in this statement. The first is that the records in the Numident file that don’t have a death date are records of people not receiving Social Security benefits. The second is that updating these records with an actual date of death would cost millions that Congress had not allocated to the SSA for this task. And simply assigning a default date of death by assumption could erroneously cut off benefits for some long-lived but still living recipient.
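The distinction between "no death date on file" and "being paid benefits" can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `Record` fields, the helper names, and the five sample rows are invented, and none of it reflects the real Numident layout or real data.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Record:
    """Hypothetical, heavily simplified stand-in for one Numident entry."""
    holder: str
    birth: date
    death: Optional[date]       # None means no date of death on file
    receiving_benefits: bool    # True only if payments are going out


def age_on(birth: date, today: date) -> int:
    """Whole years between birth and today."""
    before_birthday = (today.month, today.day) < (birth.month, birth.day)
    return today.year - birth.year - before_birthday


def no_death_date_over(records: List[Record], min_age: int, today: date) -> List[Record]:
    """Records that look 'impossibly old' because no death date was recorded."""
    return [r for r in records
            if r.death is None and age_on(r.birth, today) >= min_age]


def paid(records: List[Record]) -> List[Record]:
    """Of the given records, only those actually receiving benefits."""
    return [r for r in records if r.receiving_benefits]


# Invented sample rows, for illustration only.
SAMPLE = [
    Record("A", date(1900, 3, 1), None, False),
    Record("B", date(1910, 7, 15), None, False),
    Record("C", date(1920, 1, 10), None, True),   # a living centenarian
    Record("D", date(1950, 6, 30), None, True),
    Record("E", date(1905, 2, 2), date(1990, 5, 5), False),
]

if __name__ == "__main__":
    today = date(2025, 1, 1)
    old = no_death_date_over(SAMPLE, 100, today)
    print(len(old), "records over 100 with no death date")
    print(len(paid(old)), "of those are being paid")
```

Counting the first list and announcing it as the second is the mistake. The two queries answer different questions, and only the second one says anything about payments.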

(I added the bit about Congress in the last paragraph to remind everyone that Congress holds the purse strings for the government. In the last month, we could be forgiven for thinking this is no longer true, but until the Constitution is tossed into the waste bin, it is true.)

The SSA OIG wrote a follow-up report on the original audit in 2024. By this time, SSA had updated the death records for all but 941,383 Numident records, moving these records to the Death Master File (DMF). Again, these are people who are not being paid benefits. However, the OIG did discover 28 people in the DMF getting benefits. At the time of the report, Social Security had cut off benefits for 14 of them and was investigating the rest.

Again, the SSA OIG recommended SSA update the death records for the remaining 915,534 Numident records that didn’t have a matching DMF record. The response from the agency was:

SSA disagreed with recommendations 1 and 2 and stated the value of the DMF lies in its accuracy and reliability. SSA analyzed the records identified in recommendations 1 and 2 and determined they did not meet the Agency’s automated algorithm criteria, and that adding the records to the DMF would pose a significant risk of posting incorrect death information on the DMF. SSA stated a laborious manual review of each record would be necessary, and since these are records of individuals who are not beneficiaries, SSA cannot devote the resources to such an effort while experiencing a customer service crisis. SSA stated it would be willing to support this effort if any DMF customers identified a need for the information and provided funding to support a manual review. SSA agreed with recommendation 3.

Now, according to a 2022 OIG audit, there have been past instances of improper benefit payout, but as the SSA response notes, Social Security investigated these and was able to recover several million dollars. And these were benefits paid to people listed as living.

In addition, if earnings are reported for someone who is supposedly dead, Social Security puts those earnings into an Earnings Suspense File and then works to correct the mismatch between the reported wages and the Social Security account. All of this is detailed in an exhaustive review of Social Security earnings processing. According to the 202r OIG report:

In Tax Years 2016 through 2020, employers and individuals reported approximately $8.5 billion in wages, tips, and self employment income using 139,211 SSNs assigned to individuals age 100 or older. SSA transferred these earnings to the Earnings Suspense File, primarily because personally identifiable information provided on the workers’ earnings reports did not match information in SSA enumeration records. Resolving these discrepancies will help improve the accuracy and completeness of the DMF and prevent future misuse of these SSNs.

The SSA processes about 245 million wage reports a year, which comes to roughly 1.2 billion wage reports for the five tax years 2016 through 2020. The 139,211 SSNs whose earnings ended up in the Earnings Suspense File amount to about 0.011 percent of that total. And this is money coming into Social Security, not money being paid out.
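For anyone who wants to check the scale, here is a small Python sketch of the arithmetic. It assumes the 245 million reports per year figure, treats Tax Years 2016 through 2020 as five years, and, like the comparison in the text, sets a count of SSNs against a count of wage reports, so it gives a rough sense of proportion and nothing more. The function and constant names are made up for this example.

```python
REPORTS_PER_YEAR = 245_000_000   # wage reports per year, as cited in the text
SUSPENSE_SSNS = 139_211          # SSNs for people 100 or older, per the OIG quote


def share_of_reports(flagged: int, per_year: int, years: int):
    """Return (total reports, fraction flagged, percent flagged)."""
    total = per_year * years
    fraction = flagged / total
    # A percentage is the fraction times 100; skipping this step
    # understates the share by a factor of one hundred.
    return total, fraction, fraction * 100


if __name__ == "__main__":
    total, fraction, percent = share_of_reports(SUSPENSE_SSNS, REPORTS_PER_YEAR, years=5)
    print(f"total reports: {total:,}")
    print(f"fraction:      {fraction:.6f}")
    print(f"percent:       {percent:.4f}%")
```

Either way the share is on the order of a hundredth of one percent, which is the point: a tiny slice of incoming wage reports, not outgoing payments.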

Whether Congress needs to allocate funds for Social Security to have perfect files is a question for Congress. But from just the reports I’ve linked (via Wayback Machine, to ensure future access since government pages have been disappearing), there is absolutely no mystery about Social Security payments and missing dates of death, and there is absolutely no proof of millions of dead people getting benefits.

As for the use of AI to somehow make all of this magically better—another DOGE/Musk claim—AI is literally nothing more than word crunching and patterns with the help of lots of computational power and data space. As the example of AI I gave earlier demonstrates, AI is incapable of discerning whether the words it is crunching are actually accurate or not.

There’s another term we use in programming: GIGO, or Garbage In, Garbage Out. May 01, 1875, indeed. Millions of dead people getting benefits, indeed.

I found the OIG reports after a simple online search, one that Musk and the members of DOGE could have performed themselves. And these are reports that DOGE was likely given.

Either Musk and other DOGE members are incapable of comprehending what was said in these reports, incapable of asking the existing system maintenance folks for this type of information, or they are deliberately misrepresenting the facts to somehow justify their existence. And I don’t think we need an AI program to tell us which answer is correct.